Add README and documentation to VirtualCamera

Add some inline documentation and a README file to document the

internals of VirtualCamera.

Test: N/A

Bug: N/A

Flag: DOCS_ONLY

Change-Id: I77670ab06a2fb65d16504ffe5d82d6c652948e29

diff --git a/services/camera/virtualcamera/README.md b/services/camera/virtualcamera/README.md

new file mode 100644

index 0000000..04b4811

--- /dev/null

+++ b/services/camera/virtualcamera/README.md

@@ -0,0 +1,164 @@

+# Virtual Camera

+

+The virtual camera feature allows third-party applications to expose a remote or

+virtual camera to the standard Android camera frameworks (Camera2/CameraX, NDK,

+Camera1).

+

+The stack is composed of four parts:

+

+1. The **Virtual Camera Service** (this directory), which implements the Camera

+ HAL and acts as an interface between the Android Camera Server and the *Virtual

+ Camera Owner* (via the VirtualDeviceManager APIs).

+

+2. The **VirtualDeviceManager**, which runs in the system process and handles the

+ communication between the Virtual Camera Service and the Virtual Camera

+ Owner.

+

+3. The **Virtual Camera Owner**, the client application declaring the Virtual

+ Camera and handling the production of image data. We will also refer to this

+ part as the **producer**.

+

+4. The **Consumer Application**, the client application consuming camera data,

+ which can be any application using the camera APIs.

+

+This document describes the functionality of the *Virtual Camera Service*.

+

+## Before reading

+

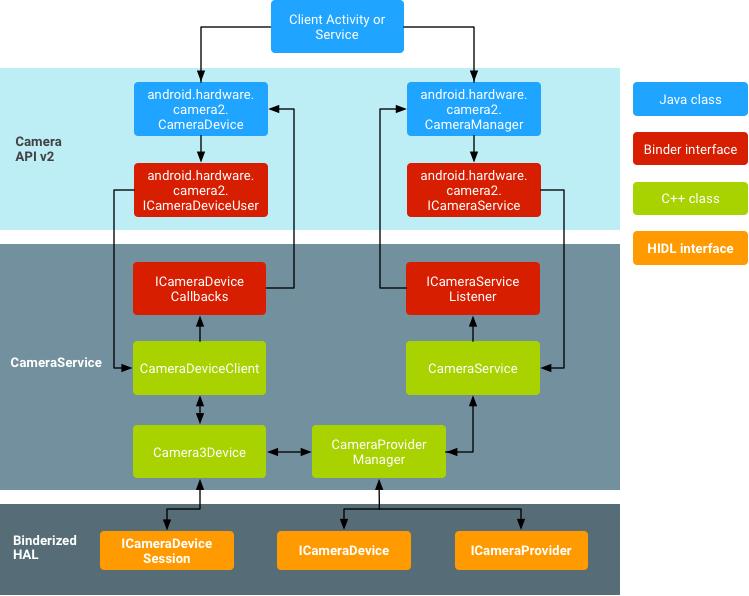

+The service implements the Camera HAL. Before going further, it helps to

+understand how the HAL works by reading the

+[HAL documentation](https://source.android.com/docs/core/camera) first.

+

+

+

+The HAL implementations are declared in:

+

+- [VirtualCameraDevice](./VirtualCameraDevice.h)

+- [VirtualCameraProvider](./VirtualCameraProvider.h)

+- [VirtualCameraSession](./VirtualCameraSession.h)

+

+## Current supported features

+

+Virtual cameras report the `EXTERNAL`

+[hardware level](https://developer.android.com/reference/android/hardware/camera2/CameraCharacteristics#INFO_SUPPORTED_HARDWARE_LEVEL),

+but some

+[features of the `EXTERNAL`](https://developer.android.com/reference/android/hardware/camera2/CameraMetadata#INFO_SUPPORTED_HARDWARE_LEVEL_EXTERNAL)

+hardware level are not fully supported.

+

+Here is a list of supported features:

+

+- Single input, multiple output streams and capture

+- Support for YUV and JPEG

+

+Notable missing features:

+

+- Support for auto 3A (AWB, AE, AF): the virtual camera announces that the 3A

+ algorithms have converged even though it receives no 3A information from the

+ owner.

+

+- Support for flash/torch

+

+## Overview

+

+Graphic data is exchanged using the Surface infrastructure. As with any other

+Camera HAL, the Surfaces to write data into are received from the client. The

+Virtual Camera exposes a **different** Surface into which the owner writes its

+data. In between, an EGL texture adapts the producer data to the format each

+consumer requires, if needed (only scaling for now; rotation and cropping may be

+supported in the future).

+

+When the client application requests multiple resolutions, the closest one

+among the supported resolutions is used for the input data, and the image data

+is down-scaled for the lower resolutions.
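+
+A minimal sketch of that selection, for illustration only: `Resolution`,
+`pickInputResolution`, and the rule that "closest" means nearest in pixel count
+to the largest requested output are assumptions, not the service's actual
+logic.
+
+```cpp
+#include <cassert>
+#include <cstdint>
+#include <vector>
+
+// Hypothetical resolution type used only in this sketch.
+struct Resolution {
+  int32_t width;
+  int32_t height;
+  int64_t pixels() const { return static_cast<int64_t>(width) * height; }
+};
+
+// Assumption: "closest" is measured in pixel count against the largest
+// requested output. Smaller outputs are later rendered from this input by
+// the EGL texture, which down-scales to each output size.
+Resolution pickInputResolution(const std::vector<Resolution>& supportedInputs,
+                               const std::vector<Resolution>& outputs) {
+  assert(!supportedInputs.empty() && !outputs.empty());
+
+  // The largest requested output drives the choice.
+  Resolution largest = outputs.front();
+  for (const Resolution& r : outputs) {
+    if (r.pixels() > largest.pixels()) largest = r;
+  }
+
+  // Absolute pixel-count difference from the largest output.
+  auto distance = [&largest](const Resolution& r) {
+    int64_t d = r.pixels() - largest.pixels();
+    return d < 0 ? -d : d;
+  };
+
+  // Keep the supported input nearest to the largest output.
+  Resolution best = supportedInputs.front();
+  for (const Resolution& r : supportedInputs) {
+    if (distance(r) < distance(best)) best = r;
+  }
+  return best;
+}
+```
+
+For example, with supported inputs of 640x480, 1280x720, and 1920x1080 and
+requested outputs of 320x240 and 1280x720, the sketch picks 1280x720, and the
+320x240 output is down-scaled from it.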

+

+The rendering pipeline depends on the type of output. Here is an overview of

+the YUV and JPEG pipelines.

+

+**YUV Rendering:**

+

+```

+Virtual Device Owner Surface[1] (Producer) --{binds to}--> EGL

+Texture[1] --{renders into}--> Client Surface[1-n] (Consumer)

+```

+

+**JPEG Rendering:**

+

+```

+Virtual Device Owner Surface[1] (Producer) --{binds to}--> EGL

+Texture[1] --{compress data into}--> temporary buffer --{renders into}-->

+Client Surface[1-n] (Consumer)

+```
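+
+For JPEG (BLOB) streams, the output buffer also ends with a transport header
+(`CameraBlob`) recording the compressed size; see the comments in
+`VirtualCameraRenderThread.cc` and `CameraBlobId.aidl`. The sketch below shows
+that layout with a hypothetical `BlobHeader` struct mirroring `CameraBlob` and a
+byte vector standing in for the locked gralloc buffer. The `0x00FF` value is
+assumed from `CameraBlobId::JPEG` and should be checked against your tree.
+
+```cpp
+#include <cassert>
+#include <cstddef>
+#include <cstdint>
+#include <cstring>
+#include <vector>
+
+// Hypothetical mirror of the AIDL CameraBlob parcelable: a blob ID followed by
+// the number of valid JPEG bytes at the start of the buffer.
+struct BlobHeader {
+  int32_t blobId;
+  int32_t blobSizeBytes;
+};
+
+// Assumed value of CameraBlobId::JPEG; verify against CameraBlobId.aidl.
+constexpr int32_t kJpegBlobId = 0x00FF;
+
+// Writes the header into the last sizeof(BlobHeader) bytes of `buffer`.
+// Returns false if the compressed data would overlap the header.
+bool writeJpegTrailer(std::vector<uint8_t>& buffer, std::size_t compressedSize) {
+  if (buffer.size() < sizeof(BlobHeader) ||
+      compressedSize > buffer.size() - sizeof(BlobHeader)) {
+    return false;
+  }
+  BlobHeader header{kJpegBlobId, static_cast<int32_t>(compressedSize)};
+  uint8_t* headerStart = buffer.data() + (buffer.size() - sizeof(BlobHeader));
+  std::memcpy(headerStart, &header, sizeof(header));
+  return true;
+}
+
+// Reads the header back from the end of the buffer, as a consumer would.
+BlobHeader readJpegTrailer(const std::vector<uint8_t>& buffer) {
+  assert(buffer.size() >= sizeof(BlobHeader));
+  BlobHeader header{};
+  std::memcpy(&header, buffer.data() + (buffer.size() - sizeof(BlobHeader)),
+              sizeof(header));
+  return header;
+}
+```
+
+Because the encoder is only given `bufferSize - sizeof(CameraBlob)` bytes, the
+compressed data can never overwrite the header at the end of the buffer.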

+

+## Life of a capture request

+

+> Before reading the following, you must understand the concepts of

+> [CaptureRequest](https://developer.android.com/reference/android/hardware/camera2/CaptureRequest)

+> and

+> [OutputConfiguration](https://developer.android.com/reference/android/hardware/camera2/OutputConfiguration).

+

+1. The consumer creates a session with one or more `Surfaces`.

+

+2. The Virtual Camera owner receives a call to

+ `VirtualCameraCallback#onStreamConfigured` with a reference to another

+ `Surface` that it can write into.

+

+3. The consumer then starts sending `CaptureRequests`. The owner receives a

+ call to `VirtualCameraCallback#onProcessCaptureRequest`, at which point it

+ should write the required data into the Surface it previously received. At

+ the same time, a new task is enqueued in the render thread.

+

+4. The [VirtualCameraRenderThread](./VirtualCameraRenderThread.cc) will consume

+ the enqueued tasks as they come. It will wait for the producer to write into

+ the input Surface (using `Surface::waitForNextFrame`).

+

+ > **Note:** Since the Surface API allows us to wait for the next frame,

+ > there is no need for the producer to notify when the frame is ready by

+ > calling a `processCaptureResult()` equivalent.

+

+5. The EGL Texture is updated with the content of the Surface.

+

+6. The EGL Texture renders into the output Surfaces.

+

+7. The Camera client is notified of the "shutter" event and the `CaptureResult`

+ is sent to the consumer.
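+
+The hand-off in steps 3 to 7 can be modeled as a producer/consumer queue. The
+sketch below uses only standard C++ threading primitives; `InputSurface`,
+`RenderQueue`, and their methods are hypothetical stand-ins for the input
+Surface and `VirtualCameraRenderThread`, and the EGL work of steps 5 to 7 is
+reduced to recording the completed frame number.
+
+```cpp
+#include <condition_variable>
+#include <cstddef>
+#include <deque>
+#include <mutex>
+#include <thread>
+#include <vector>
+
+// Hypothetical input Surface: postFrame() models the owner queuing a frame,
+// waitForNextFrame() blocks until a frame is available and consumes it.
+class InputSurface {
+ public:
+  void postFrame() {
+    {
+      std::lock_guard<std::mutex> lock(mutex_);
+      ++availableFrames_;
+    }
+    cv_.notify_all();
+  }
+
+  void waitForNextFrame() {
+    std::unique_lock<std::mutex> lock(mutex_);
+    cv_.wait(lock, [this] { return availableFrames_ > 0; });
+    --availableFrames_;
+  }
+
+ private:
+  std::mutex mutex_;
+  std::condition_variable cv_;
+  std::size_t availableFrames_ = 0;
+};
+
+// Hypothetical render thread processing capture requests in FIFO order.
+// Shutdown drains the queue, so every queued request must receive a frame.
+class RenderQueue {
+ public:
+  explicit RenderQueue(InputSurface& input) : input_(input) {
+    worker_ = std::thread([this] { run(); });
+  }
+
+  ~RenderQueue() {
+    {
+      std::lock_guard<std::mutex> lock(mutex_);
+      stopping_ = true;
+    }
+    cv_.notify_all();
+    worker_.join();
+  }
+
+  // Step 3: a CaptureRequest arrives and a task is enqueued.
+  void enqueue(int frameNumber) {
+    {
+      std::lock_guard<std::mutex> lock(mutex_);
+      pending_.push_back(frameNumber);
+    }
+    cv_.notify_all();
+  }
+
+  // Blocks until `count` requests have completed and returns their numbers.
+  std::vector<int> waitForCompleted(std::size_t count) {
+    std::unique_lock<std::mutex> lock(mutex_);
+    cv_.wait(lock, [&] { return completed_.size() >= count; });
+    return completed_;
+  }
+
+ private:
+  void run() {
+    for (;;) {
+      int frameNumber = 0;
+      {
+        std::unique_lock<std::mutex> lock(mutex_);
+        cv_.wait(lock, [this] { return stopping_ || !pending_.empty(); });
+        if (pending_.empty()) return;  // Stopping with nothing left to do.
+        frameNumber = pending_.front();
+        pending_.pop_front();
+      }
+      // Step 4: block until the owner has written into the input Surface.
+      input_.waitForNextFrame();
+      // Steps 5 to 7 (texture update, rendering, shutter and CaptureResult)
+      // are reduced to bookkeeping in this sketch.
+      {
+        std::lock_guard<std::mutex> lock(mutex_);
+        completed_.push_back(frameNumber);
+      }
+      cv_.notify_all();
+    }
+  }
+
+  InputSurface& input_;
+  std::mutex mutex_;
+  std::condition_variable cv_;
+  std::deque<int> pending_;
+  std::vector<int> completed_;
+  bool stopping_ = false;
+  std::thread worker_;
+};
+```
+
+Because the render thread blocks on the input Surface itself, the owner never
+has to signal that a frame is ready, which matches the note in step 4.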

+

+## EGL Rendering

+

+### The render thread

+

+The [VirtualCameraRenderThread](./VirtualCameraRenderThread.h) module takes care

+of rendering the input from the owner to the output via the EGL Texture. The

+rendering is done either into a JPEG buffer (the BLOB rendering used to create

+a JPEG) or into a YUV buffer, used mainly for preview Surfaces or video.

+Two EGLPrograms (shaders) defined in [EglProgram](./util/EglProgram.cc) handle

+the rendering of the data.

+

+### Initialization

+

+[EglDisplayContext](./util/EglDisplayContext.h) initializes the whole EGL

+environment (Display, Surface, Context, and Config).

+

+The EGL rendering is backed by an

+[ANativeWindow](https://developer.android.com/ndk/reference/group/a-native-window),

+the native counterpart of a

+[Surface](https://developer.android.com/reference/android/view/Surface). A

+Surface is itself the producer side of a buffer queue whose consumer is either

+the display (camera preview) or an encoder (to save the data or send it across

+the network).

+

+### More about OpenGL

+

+To better understand how EGL rendering works, the following resources can help:

+

+Introduction to OpenGL: https://learnopengl.com/

+

+The official EGL API documentation is available at:

+https://www.khronos.org/registry/egl/sdk/docs/man/xhtml/

+

+To find the page for a specific function, search Google with the following query:

+

+```

+[function name] site:https://registry.khronos.org/EGL/sdk/docs/man/html/

+

+// example: eglSwapBuffers site:https://registry.khronos.org/EGL/sdk/docs/man/html/

+```

diff --git a/services/camera/virtualcamera/VirtualCameraRenderThread.cc b/services/camera/virtualcamera/VirtualCameraRenderThread.cc

index 08b8e17..3557791 100644

--- a/services/camera/virtualcamera/VirtualCameraRenderThread.cc

+++ b/services/camera/virtualcamera/VirtualCameraRenderThread.cc

@@ -679,7 +679,7 @@

return {};

}

- // TODO(b/324383963) Add support for letterboxing if the thumbnail size

+ // TODO(b/324383963) Add support for letterboxing if the thumbnail size

// doesn't correspond

// to input texture aspect ratio.

if (!renderIntoEglFramebuffer(*framebuffer, /*fence=*/nullptr,

@@ -753,6 +753,7 @@

PlanesLockGuard planesLock(hwBuffer, AHARDWAREBUFFER_USAGE_CPU_READ_RARELY,

fence);

if (planesLock.getStatus() != OK) {

+ ALOGE("%s: Failed to lock hwBuffer planes", __func__);

return cameraStatus(Status::INTERNAL_ERROR);

}

@@ -760,23 +761,35 @@

createExif(Resolution(stream->width, stream->height), resultMetadata,

createThumbnail(requestSettings.thumbnailResolution,

requestSettings.thumbnailJpegQuality));

+

+ size_t outBufferSize = stream->bufferSize - sizeof(CameraBlob);

+ void* outBuffer = (*planesLock).planes[0].data;

std::optional<size_t> compressedSize = compressJpeg(

stream->width, stream->height, requestSettings.jpegQuality,

- framebuffer->getHardwareBuffer(), app1ExifData,

- stream->bufferSize - sizeof(CameraBlob), (*planesLock).planes[0].data);

+ framebuffer->getHardwareBuffer(), app1ExifData, outBufferSize, outBuffer);

if (!compressedSize.has_value()) {

ALOGE("%s: Failed to compress JPEG image", __func__);

return cameraStatus(Status::INTERNAL_ERROR);

}

+ // Add the transport header at the end of the JPEG output buffer.

+ //

+ // jpegBlobId must start at byte[buffer_size - sizeof(CameraBlob)],

+ // where buffer_size is the size of the gralloc buffer.

+ //

+ // See

+ // hardware/interfaces/camera/device/aidl/android/hardware/camera/device/CameraBlobId.aidl

+ // for the full explanation of the following code.

CameraBlob cameraBlob{

.blobId = CameraBlobId::JPEG,

.blobSizeBytes = static_cast<int32_t>(compressedSize.value())};

- memcpy(reinterpret_cast<uint8_t*>((*planesLock).planes[0].data) +

- (stream->bufferSize - sizeof(cameraBlob)),

- &cameraBlob, sizeof(cameraBlob));

+ // Copy the cameraBlob to the end of the JPEG buffer, right after the

+ // outBufferSize bytes reserved for the compressed data.

+ uint8_t* cameraBlobAddress =

+ static_cast<uint8_t*>(outBuffer) + outBufferSize;

+ memcpy(cameraBlobAddress, &cameraBlob, sizeof(cameraBlob));

ALOGV("%s: Successfully compressed JPEG image, resulting size %zu B",

__func__, compressedSize.value());

diff --git a/services/camera/virtualcamera/util/EglDisplayContext.cc b/services/camera/virtualcamera/util/EglDisplayContext.cc

index 166ac75..54307b4 100644

--- a/services/camera/virtualcamera/util/EglDisplayContext.cc

+++ b/services/camera/virtualcamera/util/EglDisplayContext.cc

@@ -54,7 +54,9 @@

EGLint numConfigs = 0;

EGLint configAttribs[] = {

EGL_SURFACE_TYPE,

- nativeWindow == nullptr ? EGL_PBUFFER_BIT : EGL_WINDOW_BIT,

+ nativeWindow == nullptr

+ ? EGL_PBUFFER_BIT // Render into individual AHardwareBuffer

+ : EGL_WINDOW_BIT, // Render into Surface (ANativeWindow)

EGL_RENDERABLE_TYPE, EGL_OPENGL_ES2_BIT, EGL_RED_SIZE, 8, EGL_GREEN_SIZE,

8, EGL_BLUE_SIZE, 8,

// no alpha

@@ -83,6 +85,9 @@

}

}

+ // EGL is a big state machine. Now that the display, config, and context are

+ // initialized, make the new context "current" on the calling thread so that

+ // subsequent GL calls operate on it.

if (!makeCurrent()) {

ALOGE(

"Failed to set newly initialized EGLContext and EGLDisplay connection "

diff --git a/services/camera/virtualcamera/util/EglProgram.cc b/services/camera/virtualcamera/util/EglProgram.cc

index 9c97118..47b8583 100644

--- a/services/camera/virtualcamera/util/EglProgram.cc

+++ b/services/camera/virtualcamera/util/EglProgram.cc

@@ -96,6 +96,7 @@

fragColor = texture(uTexture, vTextureCoord);

})";

+// Shader to render an RGBA texture into a YUV buffer.

constexpr char kExternalRgbaTextureFragmentShader[] = R"(#version 300 es

#extension GL_OES_EGL_image_external_essl3 : require

#extension GL_EXT_YUV_target : require